马尔科夫决策过程

一个马尔科夫决策过程由$5$元组$(S,A,P_{\cdot}(\cdot,\cdot),R_{\cdot}(\cdot,\cdot),\gamma)$描述,其中

$S$是有限的状态集合;

$A(s)$是有限的动作集合, $s\in S$;

$R_{a}(s,s’)$是从状态$s$采取动作$a$转移到状态$s’$的及时报酬,也常记为$R(s)$或者$R(s,a)$;

$P_a(s,s’)=P(s_{t+1}=s’|s_t=s,a_t=a)$是从状态$s$采取动作$a$转移到状态$s’$的概率;

$\gamma\in[0,1]$是一个折扣因子,表示了不同时间报酬的重要性。

MDP目标是寻找一个策略$\pi(s)\rightarrow a$以最大化长时间的报酬期望。在强化学习领域中,我们研究的马尔科夫决策过程策略是最简单的历史无关、确定性策略。

总报酬

状态$s$的效用函数或值函数$V(s)$从时间$t$开始的总折扣报酬,

可以证明

策略

最优策略满足

在给定策略$\pi$的条件下,长期折扣报酬期望为

这与即时报酬$R(s)$不同.

由贝尔曼方程,值函数可以分解为两部分:即时报酬$R(s)$和下一阶段的值函数乘上折扣因子$\gamma V(S_{t+1})$.

相似结论也对$V^{\pi}(s)$成立.

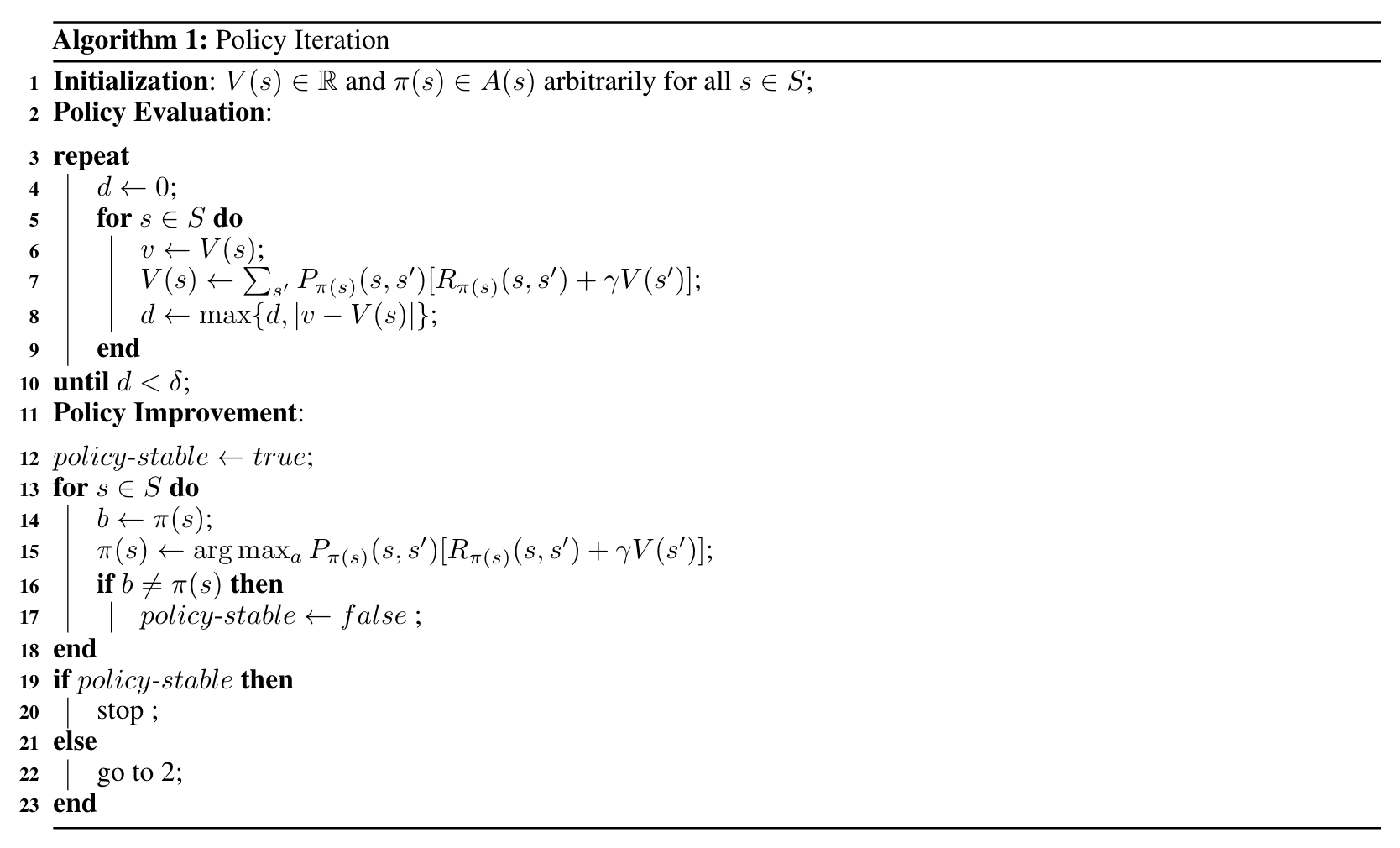

策略迭代强化学习

策略迭代尝试轮流评估、改善策略。一旦一个策略$\pi$通过值函数$V^{\pi}$改善后,得到更优策略$\pi’$,我们可以计算新的值函数$V^{\pi’}$并且继续改进策略。因此我们可以得到一个单调改善的策略序列:

其中$\xrightarrow{E}$表示策略评估,$\xrightarrow{I}$表示策略改善。在这个过程,每个策略都严格优于前一个策略,除非该策略已经是最优的。因为有限马尔科夫决策过程只有有限个策略,这保证了算法的收敛性。

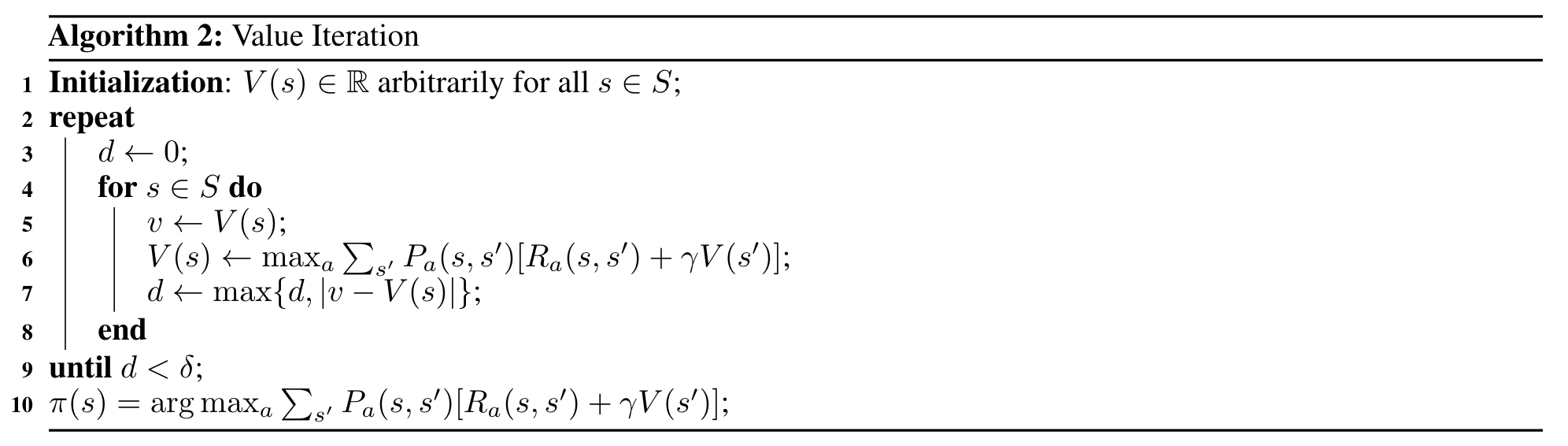

值迭代强化学习

策略迭代的一个缺陷是每一次迭代中的策略评估需要扫描全部状态。如果我们在每一次迭代只进行一次策略评估和策略改善,那么收敛得到的值函数对应的也应该是最优策略对应的值函数,在下式,我们并没有直接求出改善后的策略,而是直接最大化值函数:

对任意$s\in S$。对任意$V_0$,可以证明序列$\{V_k\}$收敛到$V^{\ast}$,只要$V^{\ast}$存在。

代码

1 | import numpy as np |

策略迭代强化学习

1 | def policy_evaluation(env, policy, gamma=1.0): |

模型评估

1 | def run_episode(env, policy, gamma = 1.0, render = False): |

值迭代强化学习

1 | def extract_policy(v, gamma = 1.0): |

模型评估

1 | def run_episode(env, policy, gamma = 1.0, render = False): |