Markov Decision Process

A Markov decision process is a $5$-tuple $(S,A,P_{\cdot}(\cdot,\cdot),R_{\cdot}(\cdot,\cdot),\gamma)$, where

$S$ is a finite set of states.

$A$ is a finite set of actions (alternatively, $A(s)$ is the finite set of actions available from state $s$).

$R_{a}(s,s’)$ is the immediate reward (or expected immediate reward) received after transitioning from state $s$ to state $s’$, due to $a$. It can also be like $R(s)$ or $R(s,a)$.

$P_a(s,s’)=P(s_{t+1}=s’|s_t=s,a_t=a)$ is the probability that action $a$ in state $s$ at time $t$ will lead to state $s’$ at time $t+1$.

$\gamma\in[0,1]$ is a discount factor, which represents the difference in importance between future rewards and present rewards.

The solution to the MDP is the policy $\pi(s)\rightarrow a$, which maximizes the long-term expected reward. In reinforcement learning domains, we simply assume the policy is deterministic and history-independent.

Total Reward

$V(s)$, the state value function, is the total discounted reward from time-step $t$,

We can show that

Policies

The long term and delayed reward is given by

which is not equal to the immediate reward $R(s)$.

From Bellman Equation, the value function can be decomposed into two parts:immediate reward $R(s)$ and discounted value of successor state $\gamma V(S_{t+1})$.

Similar result holds for $V^{\pi}(s)$.

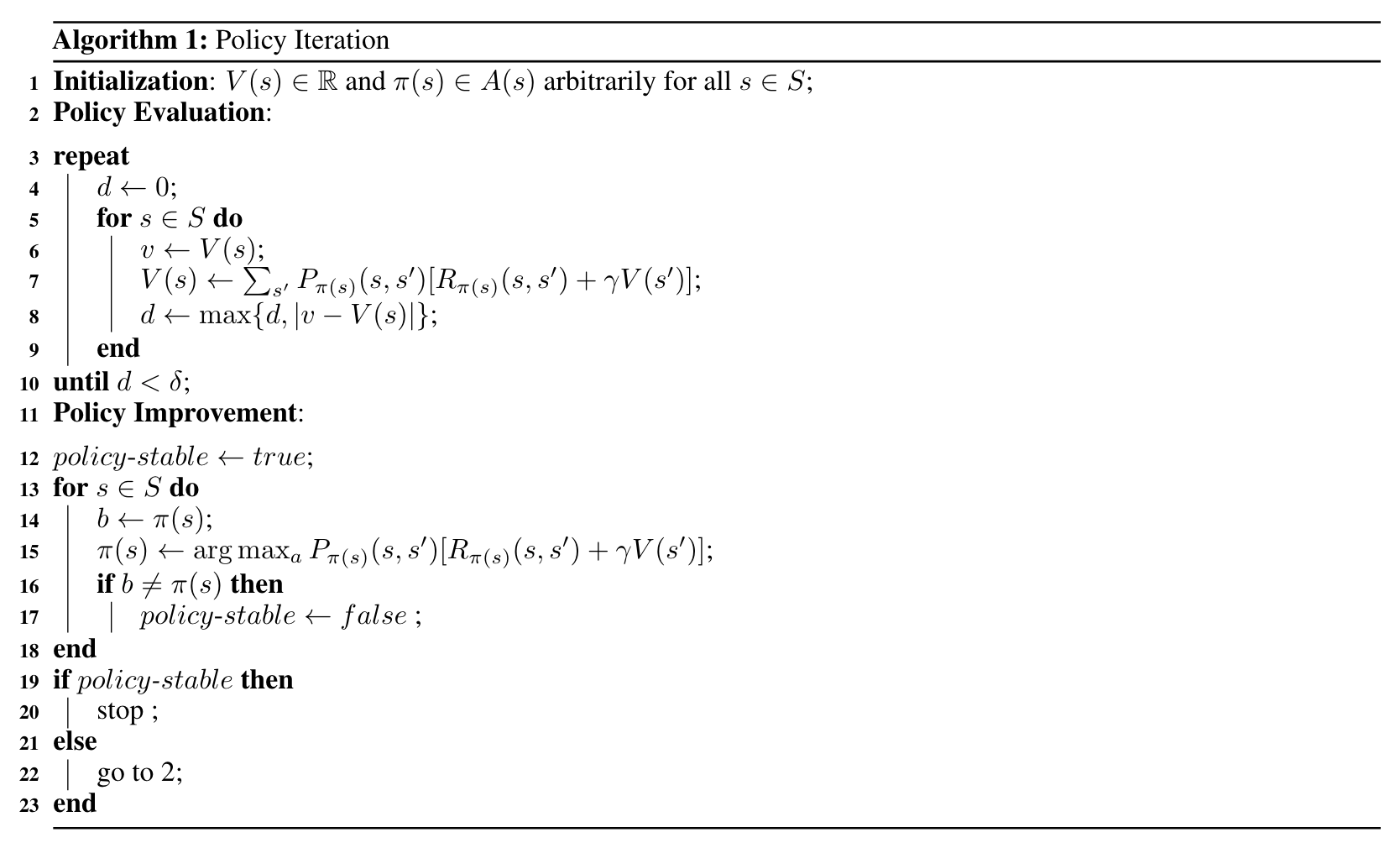

Policy-Iterated RL

Policy iteration tries to evaluate and improve the policy in turn. Once a policy $\pi$ has been improved by using $V^{\pi}$ to yield a better policy $\pi’$, we can then compute $V^{\pi’}$ and improve it again to yield an even better policy. We can thus obtain a sequence of monotonically improving policies and value functions:

where $\xrightarrow{E}$ denotes a policy evaluation and $\xrightarrow{I}$ denotes a policy improvement. Each policy is guaranteed to be a strict improvement over the previous one (unless it is already optimal). Because a finite MDP has only a finite number of policies, this process must converge to an optimal policy and optimal value function in a finite number of iterations.

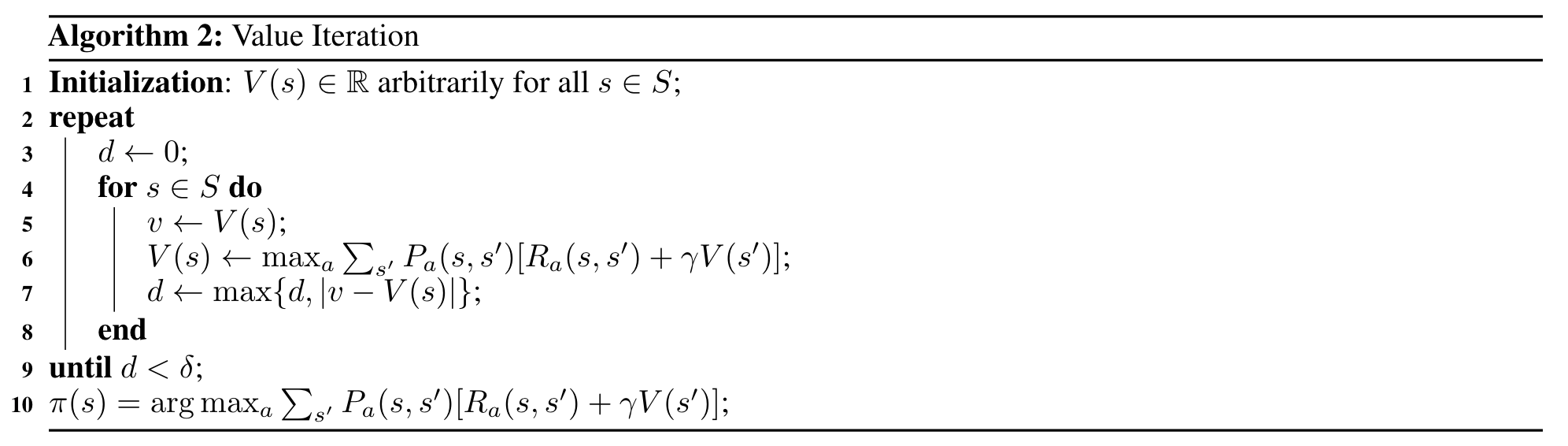

Value-Iterated RL

One drawback to policy iteration is that each of its iterations involves policy evaluation, which may itself be a protracted iterative computation requiring multiple sweeps through the state set. If policy evaluation is done iteratively, then convergence exactly to occurs only in the limit.

In fact, the policy evaluation step of policy iteration can be truncated in several ways without losing the convergence guarantees of policy iteration. One important special case is when policy evaluation is stopped after just one sweep (one backup of each state). This algorithm is called value iteration. It can be written as a particularly simple backup operation that combines the policy improvement and truncated policy evaluation steps:

for all $s\in S$. For arbitrary $V_0$, the sequence $\{V_k\}$ can be shown to converge to $V^\ast$ under the $V^\ast$.

Code Example

1 | import numpy as np |

Policy-Iterated RL

1 | def policy_evaluation(env, policy, gamma=1.0): |

Evaluation

1 | def run_episode(env, policy, gamma = 1.0, render = False): |

Value-Iterated RL

1 | def extract_policy(v, gamma = 1.0): |

Evaluation

1 | def run_episode(env, policy, gamma = 1.0, render = False): |