Introduction

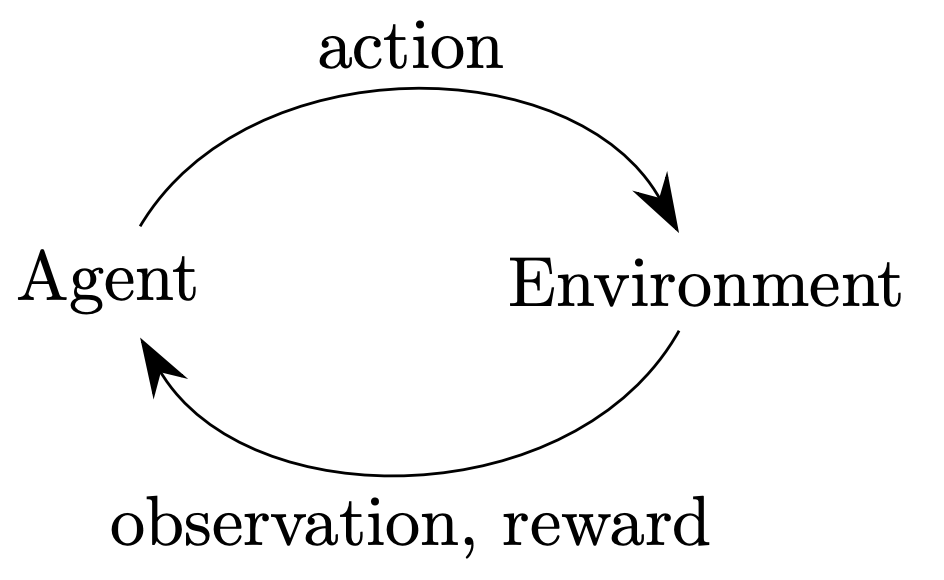

Reinforcement learning is a computational goal-directed approach to learning from interaction with environment.

- trial-and-error search

- delayed reward

We can use ideas from dynamical systems theory to formulate a reinforcement learning problem, specifically, as the optimal control of incompletely-known Markov decision processes. A learning agent must be able to sense the state of its environment to some extent and must be able to take actions that affect the state. The agent also must have a goal or goals relating to the state of the environment.

One of the challenges that arise in reinforcement learning, and not in other kinds of learning, is the trade-off between exploration and exploitation. The agent has to exploit what it has already experienced in order to obtain reward, but it also has to explore in order to make better action selections in the future.

Methods based on general principles, such as search or learning, were characterized as “weak methods,” whereas those based on specific knowledge were called “strong methods.”

Taxonomy

In reinforcement learning, we have two orthogonal choices: what kind of objective to optimize (involving a policy, value function, or dynamics model), and what kind of function approximators to use.

First, we have two kinds of algorithms:

- Model-based RL algorithms: based on dynamics model that tends to predict the next state and the reward at the next step;

- Model-free RL algorithms: not based on a dynamics model.

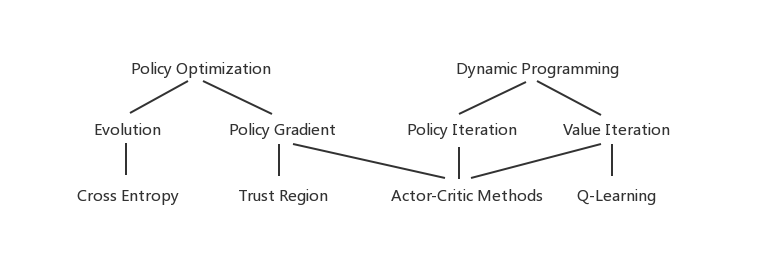

Then, for model-free RL algorithms, the figure below shows a taxonomy:

Policy optimization methods are centered around the policy, the function that maps the agent’s state to its next action. These methods view reinforcement learning as a numerical optimization problem where we optimize the expected reward with respect to the policy’s parameters. There are two ways to optimize a policy.

- Derivative free optimization (DFO) algorithms, including evolutionary algorithms, like genetic programming, simulated annealing, etc.;

- Policy gradient methods.

Approximate dynamic programming (ADP) focus on learning value functions, which predict how much reward the agent is going to receive.

Finally, there are actor-critic methods that combine elements from both policy optimization and dynamic programming. These methods optimize a policy, but they use value functions to speed up this optimization, and often use ideas from approximate dynamic programming to fit the value functions.

Elements of Reinforcement Learning

- a policy;

- a reward signal;

- a value function;

- (optionally) a model of the environment.